Anything is possible. You can use rpy2 to run R code inside of python code or reticulate to do the opposite. You can also just have the R package available as well as the data files available separately.

I’d really just recommend doing what’s easiest for you.

Speaking for myself - honestly I just see this as a good corpus upon which to do an open-ended exploration of available techniques. I’m abstractly fascinated by NPL but I’m just not intrinsically curious about the typical use-cases shown in tutorials (Early 20th Century / late 19th century literature, and marketing / brand management).

Here’s a really trivial example - basically me just plugging the dataset in to the example code provided on the tidytext home page

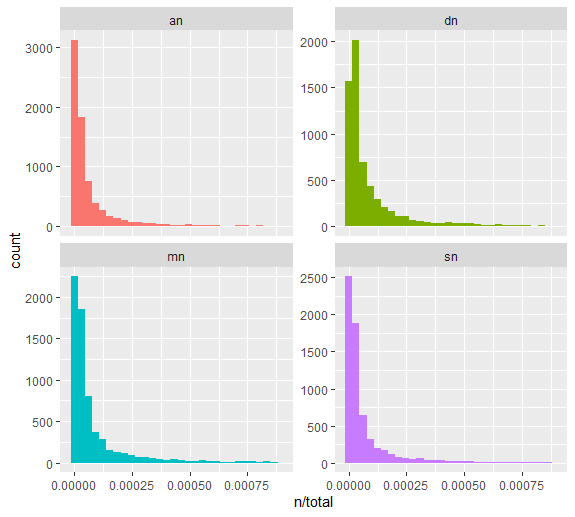

This is a basic sentiment analysis of the four nikayas across their “discourse number” (which for the AN is the number it’s the book of, e.g. the book of ones is discourse number 1)

Well, having read the AN, I immediately recognize why it has the greatest range in this measure of sentiment. Each “discourse” of the AN is just way longer than each “discourse” of the MN as this data set defines it. So the more interesting measure isn’t net sentiment but percentage positive sentiment.

So, here we see that sentiment is fairly even throughout the AN (hovering at around 50%, probably because the AN is exhaustive in trying to say everything in both the positive and negative form), and there’s some interesting patterns in the other nikayas. What’s that one spike in SN? My guess before looking it up is that it’s the inventory of the chief followers excellent qualities. Turn’s out I’m wrong - it’s SN34, the section on absorption. Interesting!

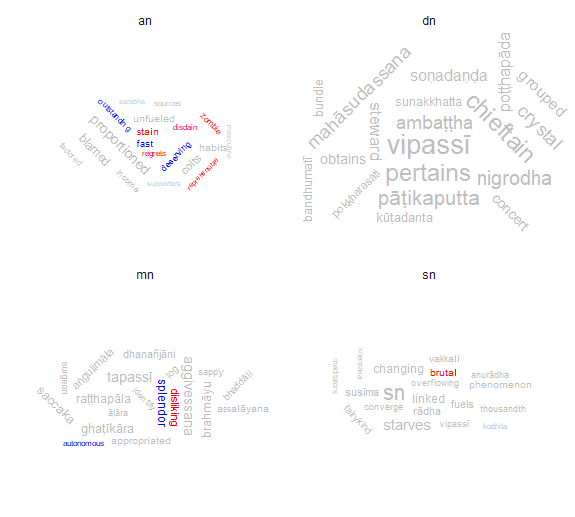

Here’s a messy graph of the comparison of word frequencies between the MN and DN, showing only words which are at least 0.01% of all the words in at least one nikaya.

So, we see overall a great deal of similarity. It looks like the word that’s most characteristic of the MN is “element” and the word that’s most characteristic of the DN is “sacrifice”. Huh.

This is all basic as can be, but personally I just find it intrinsically interesting.